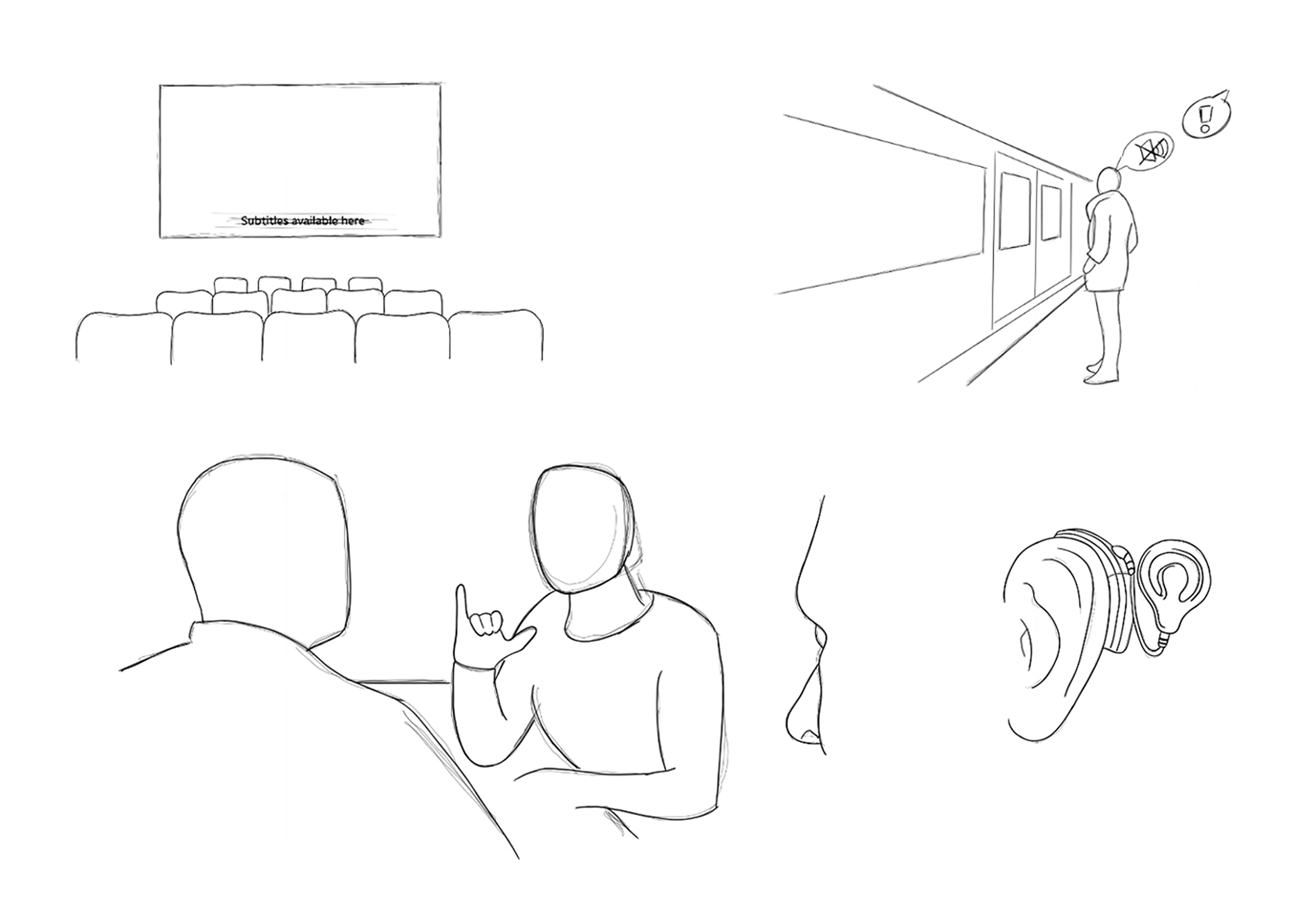

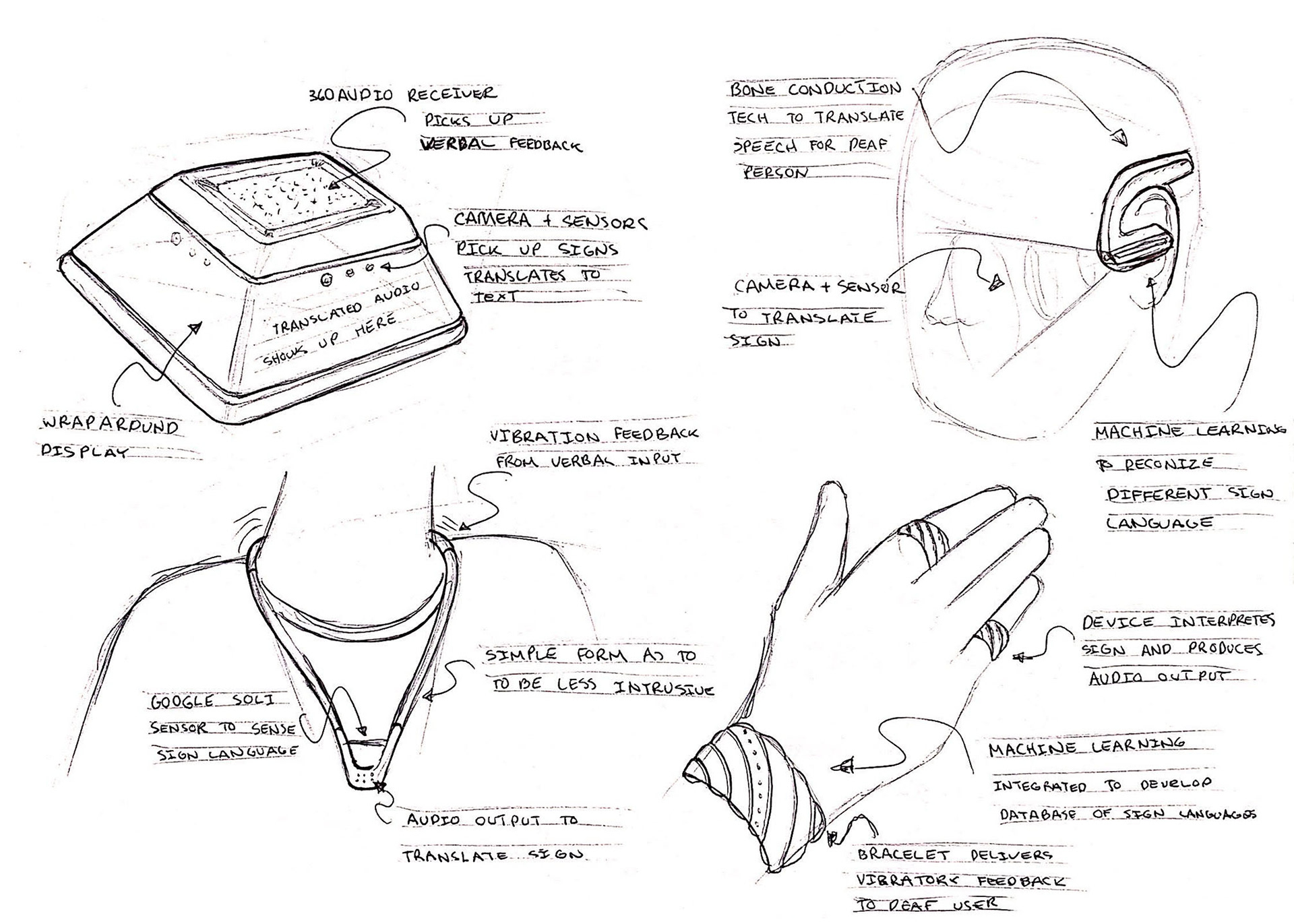

Our initial problem space revolved around helping deaf and hard of hearing people communicate with the hearing world, using AI and machine learning to translate their signing into audio output.

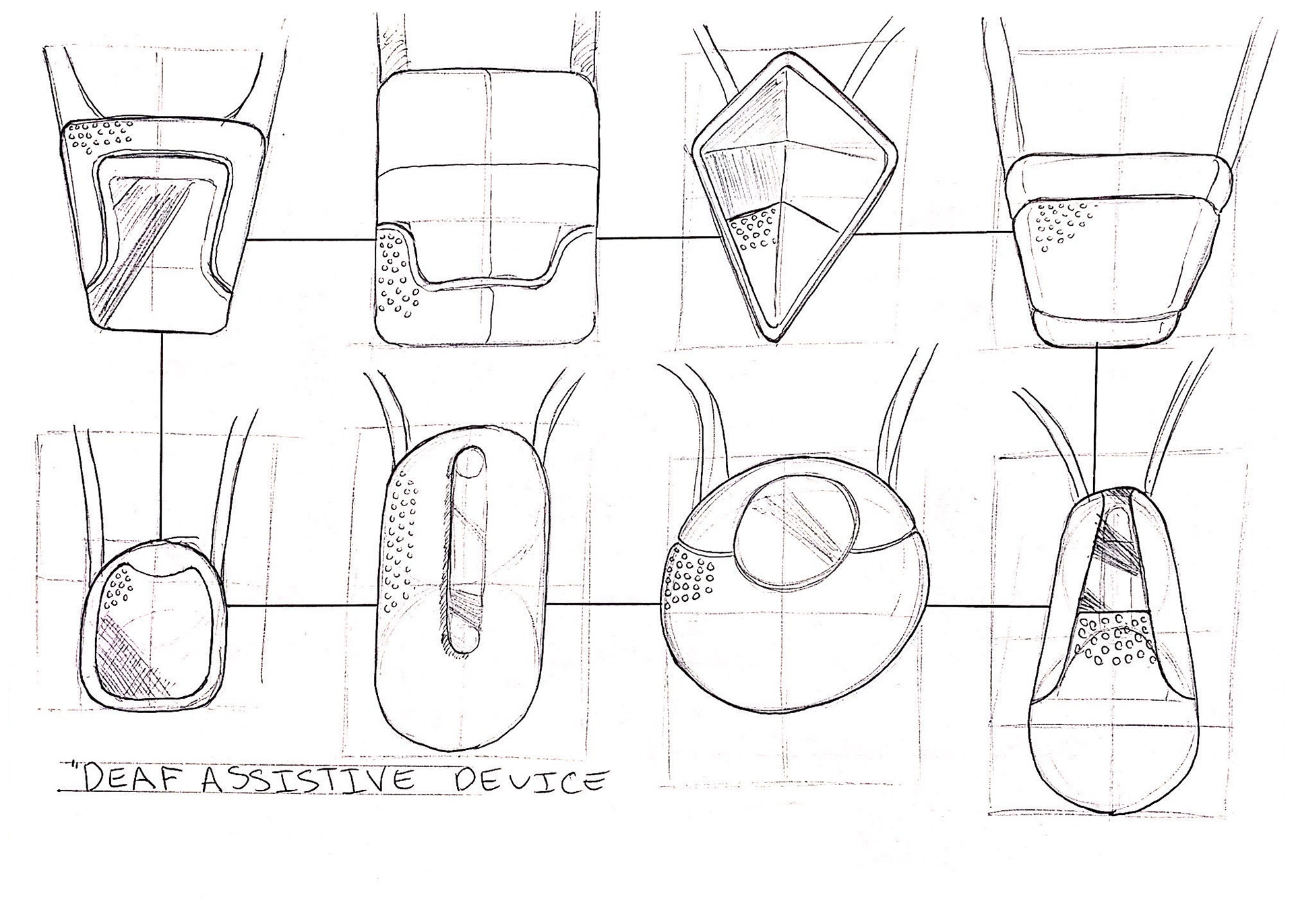

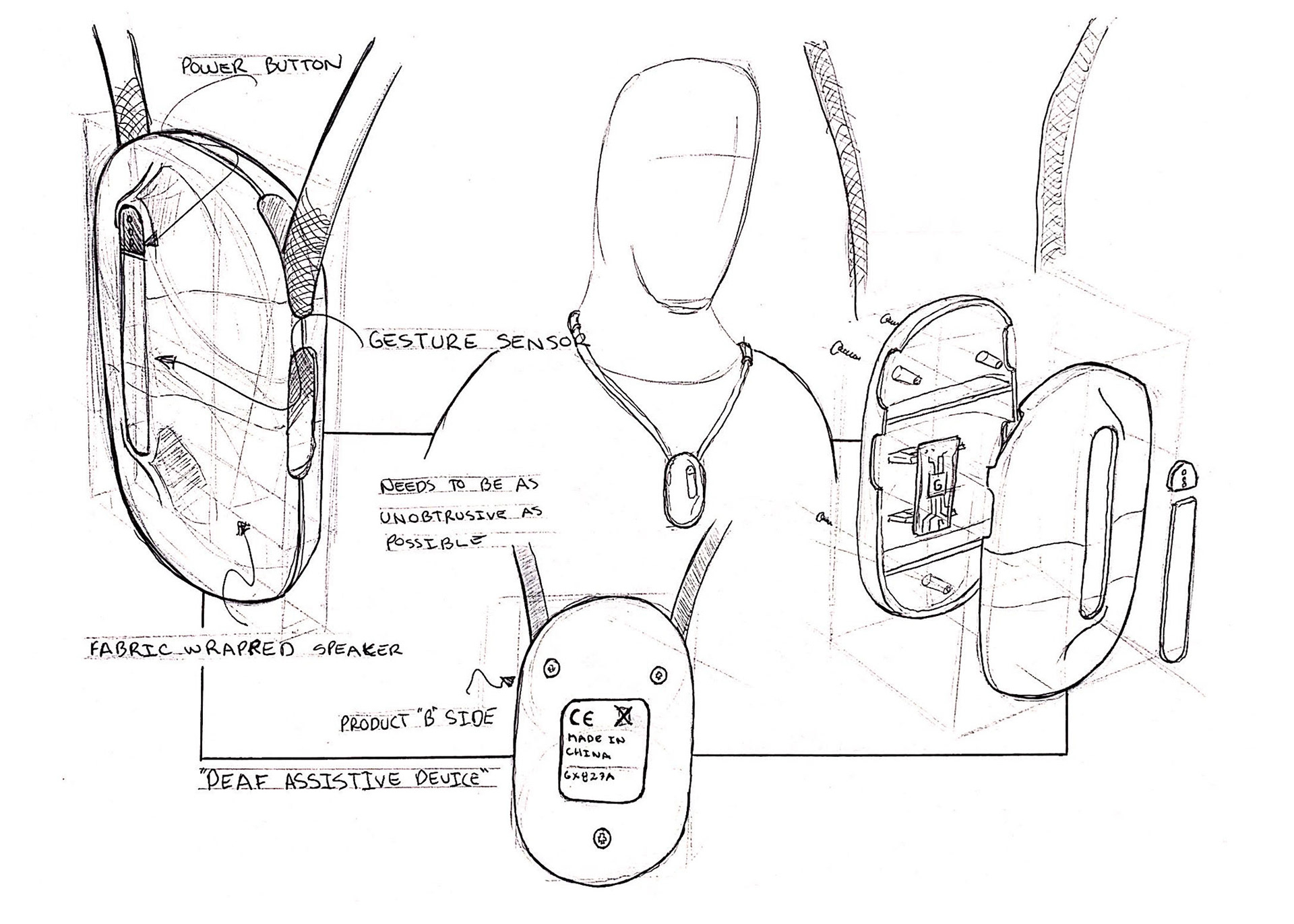

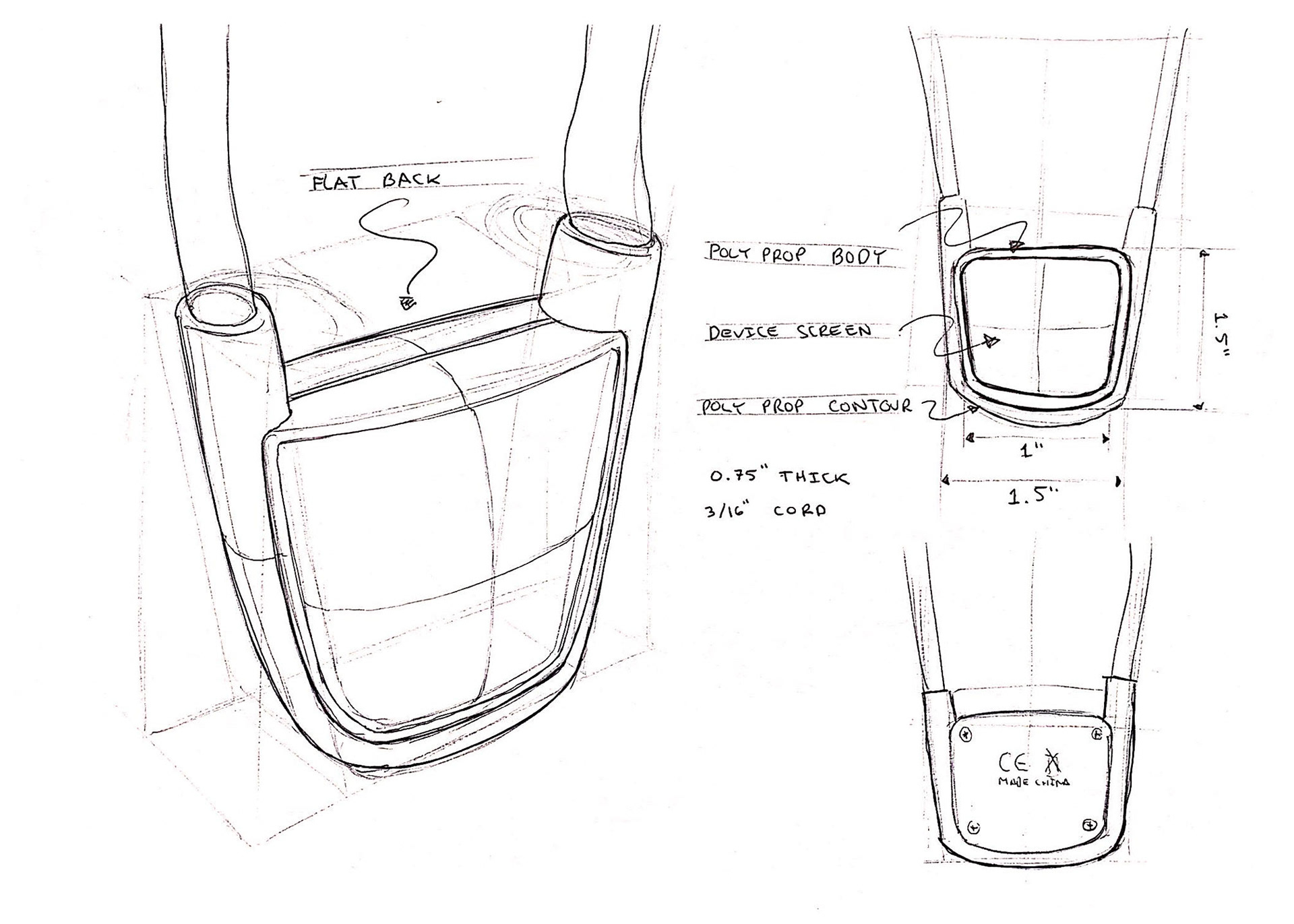

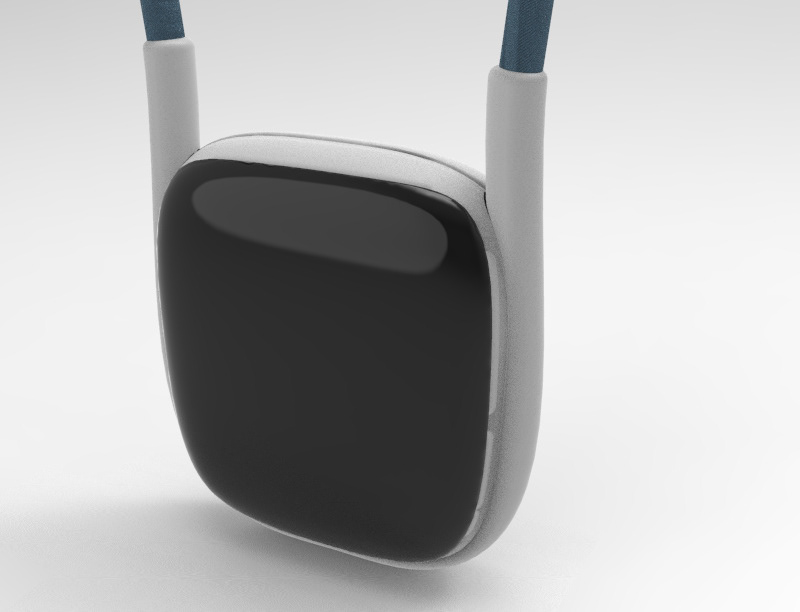

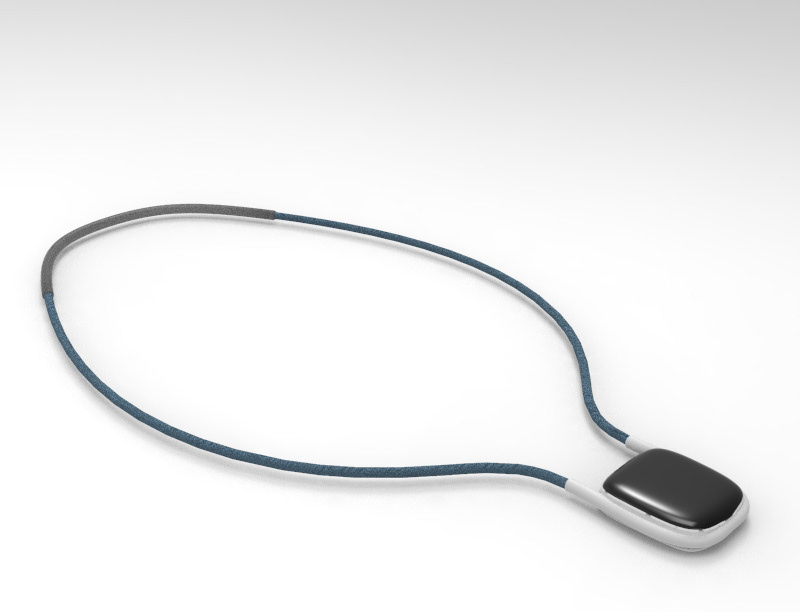

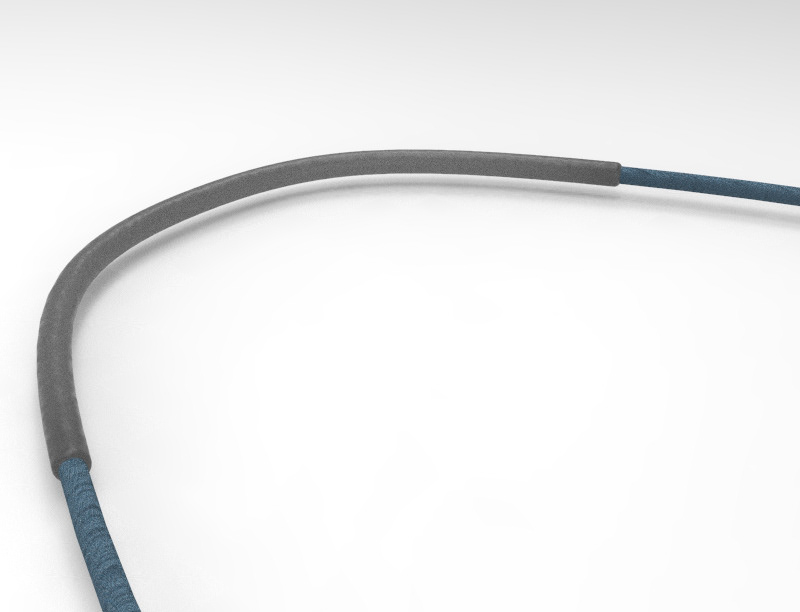

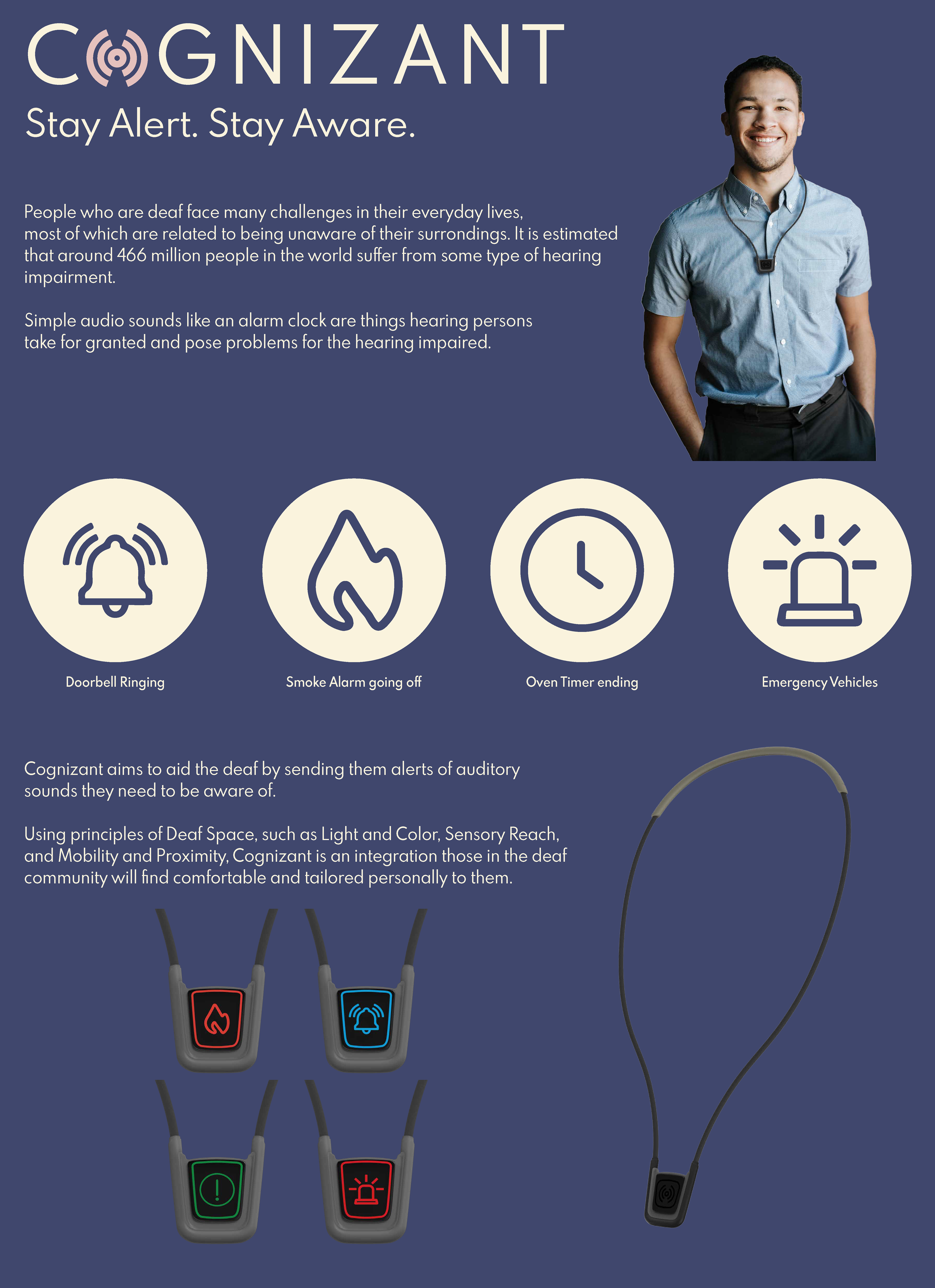

We settled on a wearable necklace device that could be worn casually and would not draw too much attention to the wearer when not in use.

To gain better insight into the problems and needs of the deaf community, we scheduled some interviews. The first was with a professor from the School of Deaf Studies at George Brown College, who is herself deaf. The second was with two accessibility coordinators at Humber College.

After our interviews, we realized something very important that went against our inherent bias: for the most part, deaf people are proud to be deaf and to be part of the interesting world of deaf culture. We also learned that sign language, especially American Sign Language (ASL), is roughly 70% facial expression and 30% hand gestures. For this reason alone, the majority of the deaf community is against tech like ASL gloves, which capture only the hands.

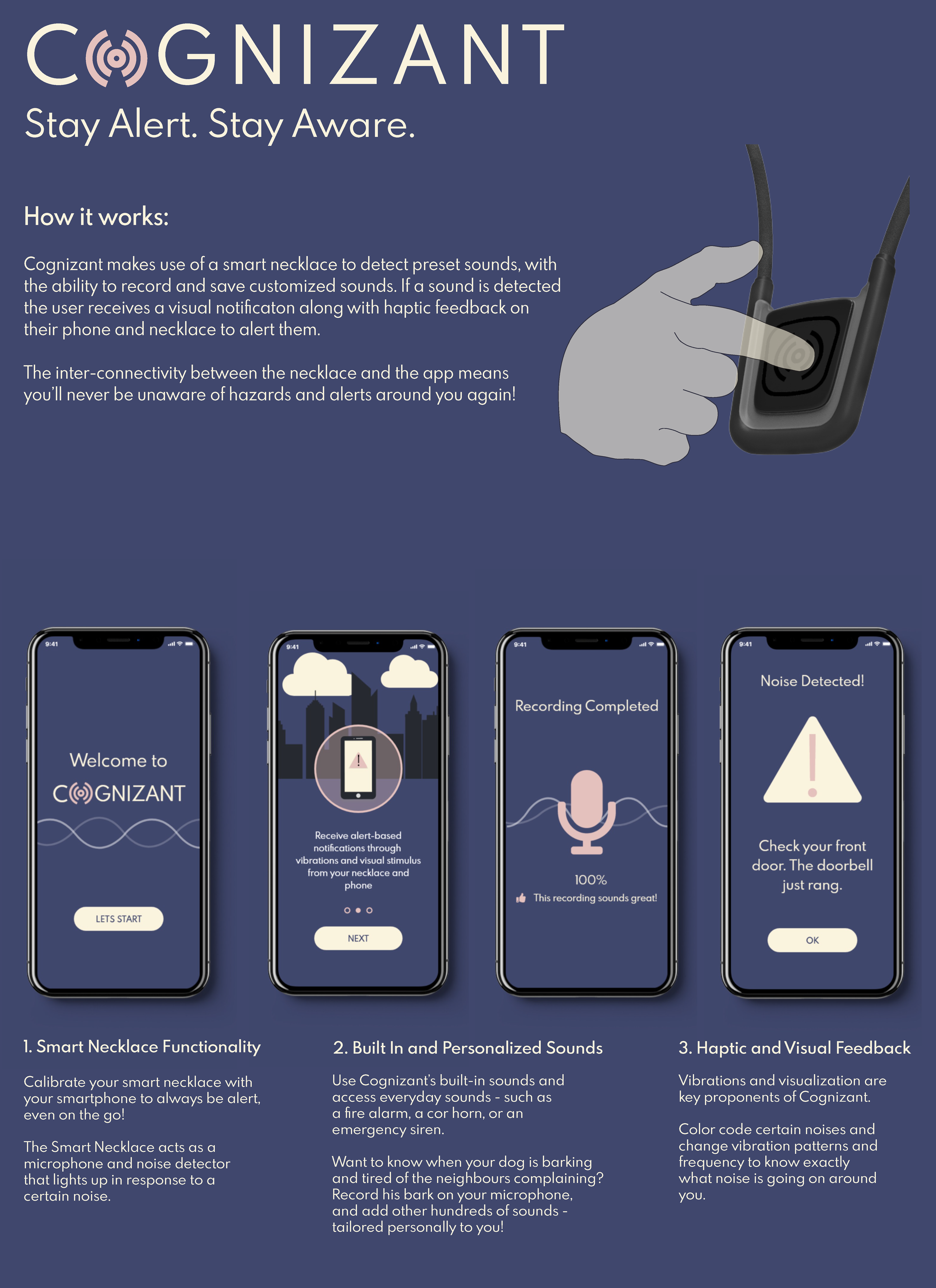

Final App Onboarding Screen + Smart Necklace

Final App UI

Due to this discovery, we altered our problem space and design to fit our new understanding, creating instead an alert system that simply aids deaf people in situations where important audio cues are present. My group of UX designers worked on the accompanying app UI. I pitched in by spotting small fixes and helping with colours.

Overall, we were quite happy with the results and gained some valuable insight into the world of deaf culture.